Fabrice NEYRET - Maverick team, LJK, at INRIA-Montbonnot (Grenoble)

The

overall goal of the larger

Galaxy

project is the real-time walk-through the galaxy with Hubble-like

visual quality. Of course we cannot store explicitly the whole galaxy

in ultra high-resolution volume of voxels. Procedural noise like

Perlin

noise allows movies and video game artists to generate on the fly

very detailed natural-looking stochastic textures, or even volumetric

density fields. Alas they are uneasy to control finely. In

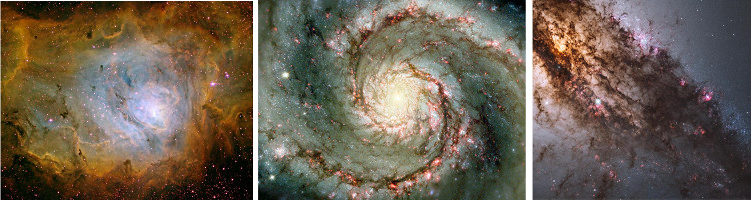

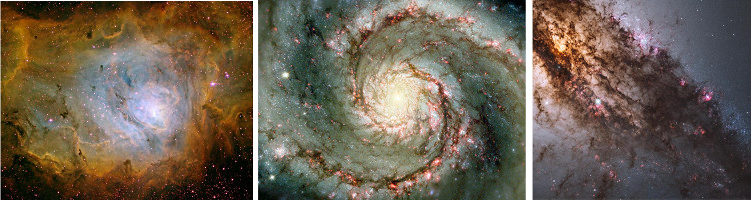

particular, many real-world textures are anisotropic (see images

above), which requires to specify varying stretch amount and

direction everywhere.

Last year we studied an approach where the volumetric noise texture is mapped inside proxy shapes, e.g., deformable tubes: the embedding takes care of the variations in direction and stretch (see results). This is promising, but determining the local coordinates in deformed tubes on the fly gets costly, especially when the nebula or dust cloud combines many of them; besides, not all chunks of dust fields look like tubes. This year we want to explore the precalculation of a volumetric mapping field where (u,v,w) is stored at the nodes of a low-res 3D grid and trilinearly interpolated in space, thus defining anisotropic deformations at no cost at render time, leaving only the volume marching and the local Perlin noise evaluation to perform.
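As an illustration of the lookup described above, here is a minimal CPU-side sketch in C++ of a (u,v,w) field stored at grid nodes and trilinearly interpolated. The names `Vec3`, `MappingGrid`, `at` and `sample`, the memory layout, and the convention that positions are given in grid units are all assumptions made for this example, not part of the existing project code. On the GPU, the same interpolation would typically be delegated to the hardware filtering of a 3D texture.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Component-wise linear interpolation between a and b.
inline Vec3 lerp(const Vec3& a, const Vec3& b, float t) {
    return { a.x + t * (b.x - a.x), a.y + t * (b.y - a.y), a.z + t * (b.z - a.z) };
}

// Hypothetical low-res grid holding one (u,v,w) triple per node.
struct MappingGrid {
    int nx, ny, nz;
    std::vector<Vec3> uvw;  // nx*ny*nz nodes, x varying fastest (assumed layout)

    MappingGrid(int nx_, int ny_, int nz_)
        : nx(nx_), ny(ny_), nz(nz_),
          uvw(static_cast<std::size_t>(nx_) * ny_ * nz_, Vec3{0.0f, 0.0f, 0.0f}) {
        assert(nx >= 2 && ny >= 2 && nz >= 2);  // need at least one cell per axis
    }

    Vec3& at(int i, int j, int k) {
        return uvw[(static_cast<std::size_t>(k) * ny + j) * nx + i];
    }
    const Vec3& at(int i, int j, int k) const {
        return uvw[(static_cast<std::size_t>(k) * ny + j) * nx + i];
    }

    // Trilinear lookup; p is in grid units, i.e. in [0,nx-1] x [0,ny-1] x [0,nz-1].
    // Positions outside the grid are clamped to its boundary.
    Vec3 sample(const Vec3& p) const {
        float x = std::min(std::max(p.x, 0.0f), float(nx - 1));
        float y = std::min(std::max(p.y, 0.0f), float(ny - 1));
        float z = std::min(std::max(p.z, 0.0f), float(nz - 1));
        // Index of the cell's lower corner, kept inside the last cell.
        int i = std::min(int(x), nx - 2);
        int j = std::min(int(y), ny - 2);
        int k = std::min(int(z), nz - 2);
        float fx = x - i, fy = y - j, fz = z - k;  // fractional position in the cell
        // Interpolate along x on the four cell edges, then along y, then along z.
        Vec3 c00 = lerp(at(i, j,     k    ), at(i + 1, j,     k    ), fx);
        Vec3 c10 = lerp(at(i, j + 1, k    ), at(i + 1, j + 1, k    ), fx);
        Vec3 c01 = lerp(at(i, j,     k + 1), at(i + 1, j,     k + 1), fx);
        Vec3 c11 = lerp(at(i, j + 1, k + 1), at(i + 1, j + 1, k + 1), fx);
        return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz);
    }
};
```

In a volume-marching loop, each sample position would be passed through `sample` and the returned (u,v,w) would then be used as the argument of the noise function, so that the stretch and direction of the noise follow the painted field.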

The issue is then how to “paint” this mapping field (plus possibly other parameters), and how to manage the domain bounding information so as to allow multiple curved noise “strokes” and voids in the same volume area. We also have to estimate the distortion due to the trilinear interpolation, and the cost of either the extra resolution or the costlier interpolation needed to avoid it. Exploring the possibilities of animating the mapping field is also an interesting target, since these astronomical objects are evolving. This was trivial with the previous approach, but with the present one we have to efficiently reconstruct the mapping field at every frame. Finally, the presence of this explicit field delimiting the noise domains could be used to skip empty space and accelerate the volume rendering.
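To give a feel for the resolution-versus-distortion trade-off mentioned above, the following C++ sketch measures the error of a piecewise-linear reconstruction against a known smooth function, in 1D only. It is a simplified stand-in for the trilinear case, not a measurement of the actual mapping field: `referenceU` is a hypothetical bent coordinate chosen for the example, and `maxLinearInterpError` and its parameters are likewise illustrative names.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical reference mapping along one axis (one coordinate of a curved "stroke").
inline double referenceU(double x) { return std::sin(x); }

// Maximum absolute error of the piecewise-linear reconstruction of f on [a, b]
// from cells+1 equally spaced nodes, probing `probes` subdivisions per cell.
template <class F>
double maxLinearInterpError(F f, double a, double b, int cells, int probes = 16) {
    const double h = (b - a) / cells;  // cell size
    double worst = 0.0;
    for (int c = 0; c < cells; ++c) {
        const double x0 = a + c * h;
        const double f0 = f(x0), f1 = f(x0 + h);  // values stored at the two nodes
        for (int s = 1; s < probes; ++s) {
            const double t = double(s) / probes;
            const double approx = f0 + t * (f1 - f0);  // linear interpolation
            worst = std::max(worst, std::fabs(approx - f(x0 + t * h)));
        }
    }
    return worst;
}
```

For a smooth function, the linear interpolation error over a cell of size h is bounded by h²/8 times the maximum of the second derivative, so halving the cell size divides the error by about four. In 3D, halving the cell size multiplies the number of grid nodes by eight, which is the kind of cost that has to be weighed against a higher-order interpolation.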

Note that the rendering is done on the GPU, usually via GLSL shaders.

Cf. the links in the description.

C/C++.

GLSL shading language or equivalent would be a plus.

Having already coded a renderer would be a plus.